torch-log-wmse: Audio Quality Metric and Loss Function Library

Project Overview

| GitHub Stats | Value |
|---|---|
| Stars | 26 |
| Forks | 1 |
| Language | Python |
| Created | 2024-03-28 |
| License | Apache License 2.0 |

Introduction

The torch-log-wmse project provides a PyTorch implementation of logWMSE (logarithm of frequency-weighted Mean Squared Error), a metric originally developed by Iver Jordal of Nomono. It is designed to evaluate and improve the quality of audio signals, and it addresses limitations of traditional audio metrics, such as their inability to handle digital-silence targets. It can be used both as a metric to assess audio quality and as a loss function for training audio separation and denoising models. The package can be installed with `pip install torch-log-wmse`.

Key Features

The key features of torch-log-wmse are summarized below.

Main Capabilities

- Custom Metric and Loss Function: logWMSE calculates the logarithm of a frequency-weighted Mean Squared Error, addressing shortcomings of common audio metrics; notably, it supports digital-silence targets.

- Usage in Training Models: It can be used as a loss function for training audio separation and denoising models.

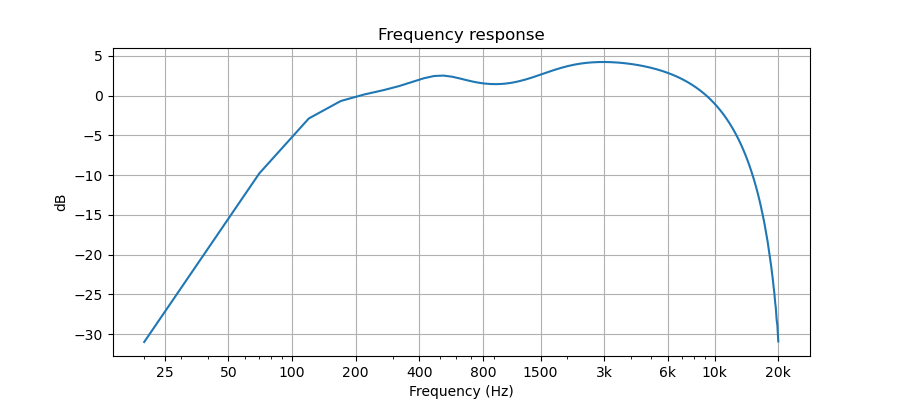

- Frequency Weighting: The metric applies frequency weighting to align with human hearing sensitivity, giving more weight to frequencies humans are more sensitive to.
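To make the idea concrete, here is a minimal pure-Python sketch of a log frequency-weighted MSE. It is not the library's implementation: the real frequency-weighting filter is replaced by a hypothetical first-order high-pass (`pre_emphasis`), and normalizing the error by the weighted RMS of the unprocessed input is an assumption about how the metric is scaled. All names here are illustrative.

```python
import math

SR = 44100  # sample rate in Hz
EPS = 1e-9  # hypothetical floor so a zero error still gives a finite log

def pre_emphasis(x, a=0.95):
    """Hypothetical stand-in for the real weighting filter: a first-order
    high-pass that attenuates low frequencies more than mid frequencies."""
    return [x[0]] + [x[n] - a * x[n - 1] for n in range(1, len(x))]

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def log_wmse_sketch(unprocessed, processed, target, weight=pre_emphasis):
    """10*log10 of the weighted MSE between processed and target, normalized
    by the weighted RMS of the unprocessed input (an assumption)."""
    ref = rms(weight(unprocessed))
    err = [(p - t) / ref for p, t in zip(weight(processed), weight(target))]
    return 10 * math.log10(sum(e * e for e in err) / len(err) + EPS)

def sine(freq, amp=1.0, n=SR // 10):
    """n samples of a sine wave at freq Hz."""
    return [amp * math.sin(2 * math.pi * freq * i / SR) for i in range(n)]

mixture = sine(440)              # unprocessed input: a 440 Hz tone
target = [0.0] * len(mixture)    # the ideal output is silence
hum = sine(50, amp=0.1)          # estimate leaking a 50 Hz error
whistle = sine(3000, amp=0.1)    # estimate leaking a 3 kHz error of equal amplitude

low = log_wmse_sketch(mixture, hum, target)
mid = log_wmse_sketch(mixture, whistle, target)
print(f"50 Hz error: {low:.1f} dB, 3 kHz error: {mid:.1f} dB")  # the 50 Hz error scores lower (better)
```

A real weighting curve is shaped more carefully than this one-pole filter; the sketch only shows that the same error amplitude costs less where the weighting is low.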

Input Requirements

- Accepts three torch tensors: unprocessed audio, processed audio, and target audio.

- The unprocessed audio has dimensions (batch, audio channels, samples); the processed and target audio add a stem (source) dimension: (batch, audio channels, audio stems, samples).
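Shape mistakes are the most common source of errors when wiring up a metric like this. Below is a hypothetical helper (not part of the library) that validates shapes before calling the metric. It assumes the layout used in the project README's example, unprocessed `(batch, channels, samples)` and processed/target `(batch, channels, stems, samples)`; confirm the order against the version you install. Shapes are passed as tuples, so with PyTorch you would pass `tuple(t.shape)`.

```python
def check_log_wmse_shapes(unprocessed, processed, target):
    """Validate shapes given as tuples, assuming the layout
    unprocessed: (batch, channels, samples)
    processed/target: (batch, channels, stems, samples).
    Returns the number of stems."""
    if len(unprocessed) != 3:
        raise ValueError(f"unprocessed must be 3-D, got {unprocessed}")
    if len(processed) != 4 or tuple(processed) != tuple(target):
        raise ValueError(f"processed and target must be equal 4-D shapes, got {processed} and {target}")
    batch, channels, samples = unprocessed
    # Batch, channel, and sample counts must agree with the unprocessed audio.
    if (processed[0], processed[1], processed[3]) != (batch, channels, samples):
        raise ValueError("batch, channel and sample dimensions must match the unprocessed audio")
    return processed[2]

stems = check_log_wmse_shapes((4, 2, 44100), (4, 2, 4, 44100), (4, 2, 4, 44100))
print(stems)  # 4
```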

Key Attributes

- Supports Digital Silence: Unlike common metrics such as SDR, it handles targets that are digital silence (all-zero samples).

- Scaled for Human Hearing: Logarithmic scaling reflects human loudness perception and gives a more useful value range than raw MSE, whose values can be extremely small.

- Scale-Invariant: The metric stays the same if the unprocessed, processed, and target audio are all scaled by the same factor.
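The attributes above can be checked with a simplified, hypothetical sketch in pure Python: frequency weighting is omitted, and the error is assumed to be normalized by the RMS of the unprocessed input. It is not the library's code. The sketch is contrasted with a plain SDR computation, which breaks down when the target is digital silence.

```python
import math

EPS = 1e-9  # hypothetical floor that keeps the log finite for a zero error

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def log_mse_sketch(unprocessed, processed, target):
    """Log MSE normalized by the unprocessed RMS; frequency weighting omitted."""
    ref = rms(unprocessed)
    mse = sum(((p - t) / ref) ** 2 for p, t in zip(processed, target)) / len(target)
    return 10 * math.log10(mse + EPS)

def sdr(processed, target):
    """Plain signal-to-distortion ratio, with target energy in the numerator."""
    num = sum(t * t for t in target)
    den = sum((t - p) ** 2 for p, t in zip(processed, target))
    return 10 * math.log10(num / den)

mixture = [math.sin(0.05 * n) for n in range(1000)]
silence = [0.0] * len(mixture)
leak = [0.01 * v for v in mixture]  # estimate with 1% leakage where silence is expected

# Digital-silence target: the reference level comes from the input, so the value is finite.
print(log_mse_sketch(mixture, leak, silence))  # about -40 dB, easier to read than a normalized MSE of 1e-4

# For a silent target the SDR numerator is 0, and math.log10(0) raises ValueError.
try:
    sdr(leak, silence)
except ValueError as exc:
    print("SDR failed:", exc)

# Scale invariance: scaling all three inputs by the same factor leaves the value unchanged.
k = 3.0
scaled = log_mse_sketch([k * v for v in mixture], [k * v for v in leak], [k * v for v in silence])
print(math.isclose(scaled, log_mse_sketch(mixture, leak, silence)))  # True
```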

Limitations

- Not invariant to arbitrary scaling, polarity inversion, or offsets of the estimated audio relative to the target.

- Does not fully model human auditory perception, such as auditory masking.
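The first limitation is easy to see with the same simplified sketch (no frequency weighting, error normalized by the unprocessed RMS, hypothetical names; not the library's code). Both distorted estimates below contain exactly the target waveform, only with the wrong gain or the wrong sign, yet neither comes close to the score of the exact estimate.

```python
import math

EPS = 1e-9  # hypothetical floor that keeps the log finite for a zero error

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def log_mse_sketch(unprocessed, processed, target):
    """Log MSE normalized by the unprocessed RMS; frequency weighting omitted."""
    ref = rms(unprocessed)
    mse = sum(((p - t) / ref) ** 2 for p, t in zip(processed, target)) / len(target)
    return 10 * math.log10(mse + EPS)

n = range(2000)
target = [0.5 * math.sin(0.31 * i) for i in n]                 # the stem we want back
mixture = [math.sin(0.05 * i) + t for i, t in zip(n, target)]  # target plus another source

exact = log_mse_sketch(mixture, target, target)                     # perfect estimate: hits the EPS floor
doubled = log_mse_sketch(mixture, [2 * t for t in target], target)  # right waveform, wrong gain
flipped = log_mse_sketch(mixture, [-t for t in target], target)     # right waveform, inverted polarity

# Each distortion is penalized; the flipped error is twice the doubled one, so about 6 dB worse.
print(exact, doubled, flipped)
```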

Installation and Usage

- Can be installed via `pip install torch-log-wmse`.

- Example usage provided in the README shows how to instantiate and use the logWMSE metric.

This project is licensed under the Apache License 2.0 and welcomes contributions.

Real-World Applications

Audio Source Separation

You can use torch-log-wmse as a loss function to train models for audio source separation. For example, if you are separating a mixed audio signal into vocals, drums, bass, and other instruments, you can calculate the logWMSE between the unprocessed mixed audio, the separated audio stems, and the target clean audio.

```python
# Example: Audio source separation
import torch
from torch_log_wmse import LogWMSE

# Assumed layout (check the project README): (batch, channels, stems, samples)
unprocessed_audio = torch.rand(4, 2, 44100)  # batch 4, stereo, 1 second of the mixture
processed_audio = torch.rand(4, 2, 4, 44100)  # batch 4, stereo, 4 separated stems
target_audio = torch.rand(4, 2, 4, 44100)  # batch 4, stereo, 4 clean reference stems

log_wmse = LogWMSE(audio_length=1.0, sample_rate=44100)
loss = log_wmse(unprocessed_audio, processed_audio, target_audio)
print(loss)  # the calculated logWMSE loss
```

Audio Denoising

For denoising audio, you can use torch-log-wmse to evaluate and train models that remove noise from an audio signal.
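One way to read a denoising score is to compare it with a trivial baseline. The pure-Python sketch below uses a simplified, hypothetical logWMSE (no frequency weighting, error normalized by the RMS of the noisy input; not the library's code) to score a "do nothing" output that returns the noisy input unchanged, next to an output with half of the noise amplitude removed.

```python
import math
import random

EPS = 1e-9  # hypothetical floor that keeps the log finite for a zero error

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def log_mse_sketch(unprocessed, processed, target):
    """Log MSE normalized by the unprocessed RMS; frequency weighting omitted."""
    ref = rms(unprocessed)
    mse = sum(((p - t) / ref) ** 2 for p, t in zip(processed, target)) / len(target)
    return 10 * math.log10(mse + EPS)

rng = random.Random(0)  # fixed seed for a reproducible example
clean = [math.sin(2 * math.pi * 440 * i / 44100) for i in range(44100)]  # 1 s of a 440 Hz tone
noise = [rng.gauss(0.0, 0.1) for _ in clean]
noisy = [c + e for c, e in zip(clean, noise)]

passthrough = log_mse_sketch(noisy, noisy, clean)  # output = input, so the error is the full noise
half_denoised = log_mse_sketch(noisy, [c + 0.5 * e for c, e in zip(clean, noise)], clean)

print(f"passthrough: {passthrough:.1f} dB, half the noise removed: {half_denoised:.1f} dB")
```

Halving the residual noise amplitude quarters the squared error, so the score improves by about 10·log10(4) ≈ 6 dB over the passthrough baseline.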

```python
# Example: Audio denoising
import torch
from torch_log_wmse import LogWMSE

# Assumed layout (check the project README): (batch, channels, stems, samples)
unprocessed_audio = torch.rand(4, 2, 44100)  # batch 4, stereo, 1 second of noisy audio
processed_audio = torch.rand(4, 2, 1, 44100)  # batch 4, stereo, 1 stem (denoised output)
target_audio = torch.rand(4, 2, 1, 44100)  # batch 4, stereo, 1 stem (clean reference)

log_wmse = LogWMSE(audio_length=1.0, sample_rate=44100)
loss = log_wmse(unprocessed_audio, processed_audio, target_audio)
print(loss)  # the calculated logWMSE loss
```

Exploring and Benefiting from the Repository

- Installation: Install the package using `pip install torch-log-wmse`.

- Usage: Use the `LogWMSE` class to calculate the logWMSE metric or loss function for your audio processing tasks.

Conclusion

Key Points:

- Custom Metric and Loss Function: logWMSE is designed for audio quality evaluation and for training audio separation and denoising models, addressing shortcomings of common metrics like MSE.

- Digital Silence Support: It supports digital silence targets, overcoming a limitation of other audio metrics.

- Human Hearing Alignment: Frequency weighting and logarithmic scaling align the metric with human hearing sensitivity, and it is scale-invariant when all inputs are scaled by the same factor.

- Multi-Input Capability: Requires unprocessed, processed, and target audio inputs for comprehensive evaluation.

- Limitations: Not invariant to arbitrary scaling, polarity inversion, or offsets; does not fully model human auditory perception.

Future Potential:

- Enhanced Audio Models: Can improve the performance of audio separation and denoising models by providing a more accurate and relevant loss function.

- Broader Applications: Potentially applicable in various audio processing tasks beyond separation and denoising, such as speech enhancement and music restoration.

- Contributions and Improvements: Open to contributions for further enhancements and new features, potentially addressing current limitations.

To explore the project further, check out the original crlandsc/torch-log-wmse repository.

Attributions

Content derived from the crlandsc/torch-log-wmse repository on GitHub. Original materials are licensed under their respective terms.
