Eureka ML Insights: Evaluation Framework for Generative Models

Project Overview

| GitHub Stats | Value |
|---|---|
| Stars | 50 |
| Forks | 4 |
| Language | Python |
| Created | 2024-07-18 |
| License | Apache License 2.0 |

Introduction

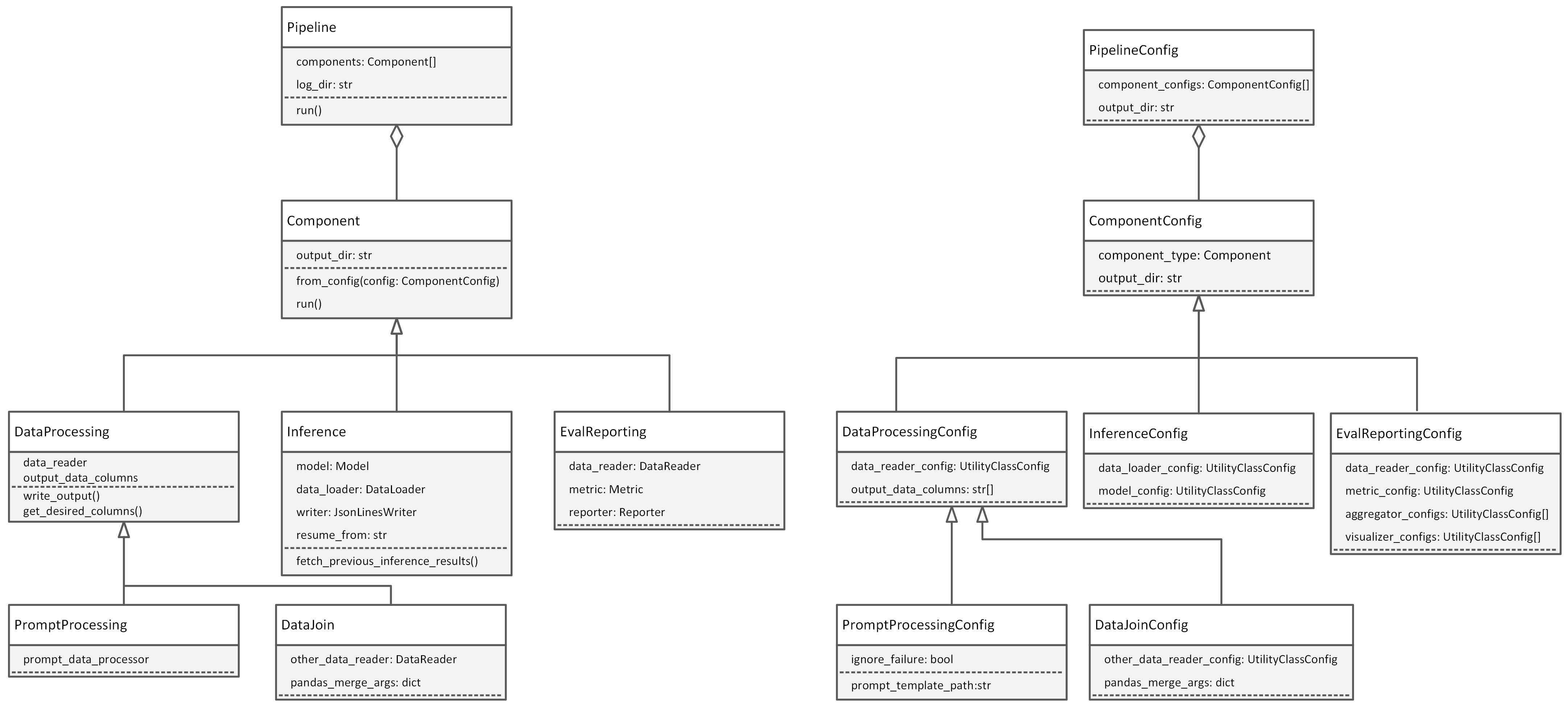

Eureka ML Insights is a framework for running reproducible evaluations of generative models against a range of benchmarks and metrics. Researchers and practitioners can build custom pipelines for data processing, inference, and evaluation, or use the pre-defined pipelines it provides for established benchmarks. These pipelines cover capabilities such as geometric reasoning, multimodal question answering, and object recognition, which helps keep evaluations thorough and consistent in both research and applied work.

Key Features

Eureka ML Insights supports custom pipelines for data processing, inference, and evaluation, and includes pre-defined pipelines for key benchmarks. Its benchmarks span multiple modalities and capabilities, including geometric reasoning, multimodal QA, object recognition, and toxicity detection. The framework provides tools for data and prompt processing, inference, and evaluation reporting. It can be installed with pip or Conda, and the repository includes detailed contribution guidelines. The project also emphasizes responsible AI considerations in model evaluation: fairness, reliability, and safety.

Real-World Applications

The eureka-ml-insights project can be used in several practical ways. Researchers can evaluate generative models on benchmarks such as GeoMeter for geometric reasoning or Toxigen for toxicity detection. Practitioners can define custom pipelines for data processing, inference, and evaluation, which makes experiments easier to reproduce. For example, running the FlenQA pipeline assesses how a model handles long-context, multi-hop question answering. Users can start from the pre-defined pipelines, customize their configurations, and report results with the included evaluation metrics. Installation via pip or Conda keeps setup simple, and the repository welcomes contributions that extend it to further model evaluations.
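To make the three-stage idea concrete, here is a minimal, framework-independent sketch of a data processing, inference, and evaluation pipeline. It does not use the eureka-ml-insights API: every name in it (`Example`, `prepare_prompts`, `run_inference`, `exact_match_accuracy`, `run_pipeline`, `toy_model`) is hypothetical, and the "model" is a deterministic stand-in rather than a real generative model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# NOTE: all names here are hypothetical illustrations, not the
# eureka-ml-insights API. The real framework defines its own components.


@dataclass
class Example:
    question: str
    answer: str


def prepare_prompts(examples: List[Example]) -> List[str]:
    """Data processing stage: turn raw examples into model prompts."""
    return [f"Question: {ex.question}\nAnswer:" for ex in examples]


def run_inference(prompts: List[str], model: Callable[[str], str]) -> List[str]:
    """Inference stage: call the model once per prompt."""
    return [model(prompt) for prompt in prompts]


def exact_match_accuracy(predictions: List[str], examples: List[Example]) -> float:
    """Evaluation stage: fraction of predictions equal to the reference answer
    (case-insensitive, ignoring surrounding whitespace)."""
    if not examples:
        return 0.0
    hits = sum(
        pred.strip().lower() == ex.answer.strip().lower()
        for pred, ex in zip(predictions, examples)
    )
    return hits / len(examples)


def run_pipeline(examples: List[Example], model: Callable[[str], str]) -> Dict[str, float]:
    """Chain the three stages and return a small metrics report."""
    prompts = prepare_prompts(examples)
    predictions = run_inference(prompts, model)
    return {"exact_match": exact_match_accuracy(predictions, examples)}


def toy_model(prompt: str) -> str:
    """Deterministic stand-in for a real model; it only 'knows' one answer."""
    return "4" if "2 + 2" in prompt else "unknown"


if __name__ == "__main__":
    data = [
        Example("What is 2 + 2?", "4"),
        Example("What is the capital of France?", "Paris"),
    ]
    print(run_pipeline(data, toy_model))
```

Because each stage is a plain function with explicit inputs and outputs, the same data and the same model callable always produce the same report, which is the property a reproducible evaluation depends on. Swapping in a different benchmark or metric would mean replacing one stage without touching the others.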

Conclusion

Eureka ML Insights supports reproducible evaluation of generative models through custom data processing, inference, and evaluation pipelines, alongside pre-defined pipelines for established benchmarks. Its future potential lies in expanding the set of benchmarks and integrating responsible AI evaluations focused on fairness, safety, and reliability, fostering collaborative progress in AI research and deployment.

To explore the project in more detail, see the original microsoft/eureka-ml-insights repository.

Attributions

Content derived from the microsoft/eureka-ml-insights repository on GitHub. Original materials are licensed under their respective terms.
